Sohyun Lee*, Jaesung Rim*, Boseung Jeong, Geonu Kim, ByungJu Woo, Haechan Lee, Sunghyun Cho†, Suha Kwak†

* Equal contribution. † Corresponding authors.

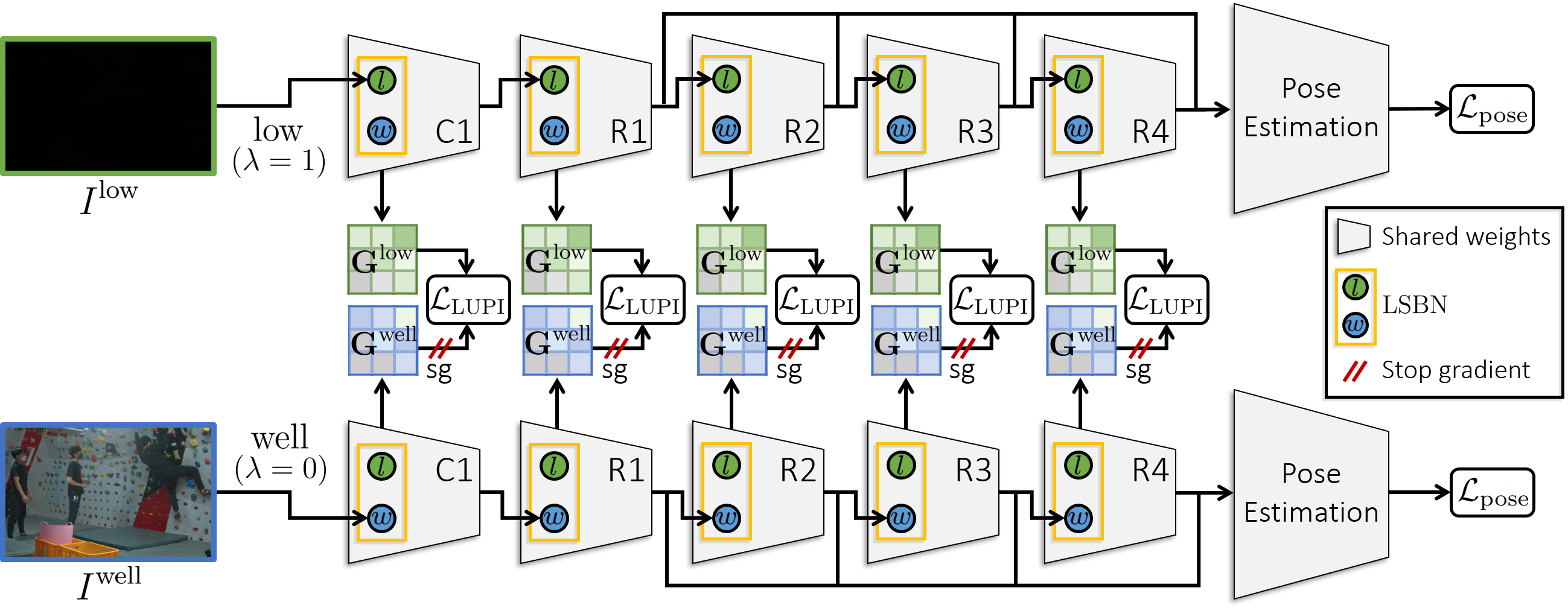

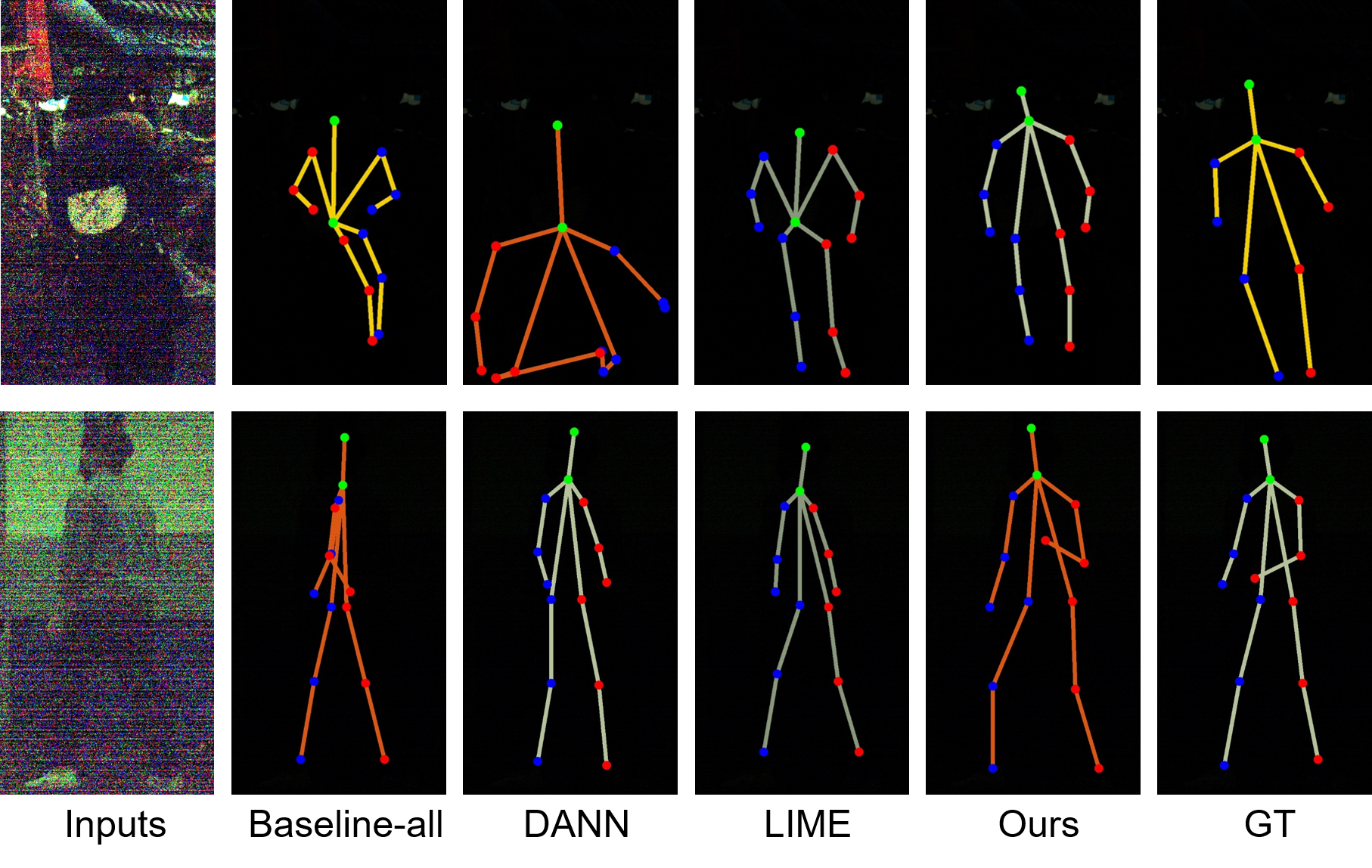

We study human pose estimation in extremely low-light images. This task is challenging for two reasons: it is difficult to collect real low-light images with accurate labels, and the severely corrupted inputs significantly degrade prediction quality. To address the first issue, we develop a dedicated camera system and build a new dataset of real low-light images with accurate pose labels. Thanks to our camera system, each low-light image in our dataset is coupled with an aligned well-lit image, which enables accurate pose labeling and is used as privileged information during training. We also propose a new model and a new training strategy that fully exploit this privileged information to learn representations insensitive to lighting conditions. Our method demonstrates outstanding performance on real extremely low-light images, and extensive analyses validate that both our model and our dataset contribute to its success.
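To make the idea of "privileged information" concrete, the sketch below shows one generic way an aligned well-lit image could be used only at training time: a pose loss on the low-light prediction (supervised by the label annotated on the well-lit image) plus a feature-matching term that pulls low-light features toward features computed on the well-lit image. This is a minimal illustration under stated assumptions, not the authors' model or training strategy; the function names, the use of mean squared error for both terms, and the weight `lam` are all hypothetical, and features and heatmaps are flattened to plain lists for simplicity.

```python
from typing import Sequence


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("length mismatch")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def privileged_loss(
    student_feat_low: Sequence[float],
    teacher_feat_well: Sequence[float],
    pred_heatmap_low: Sequence[float],
    gt_heatmap: Sequence[float],
    lam: float = 1.0,
) -> float:
    """Hypothetical combined loss for one aligned low-light / well-lit pair.

    - pose term: the prediction on the low-light image is compared with the
      ground-truth heatmap annotated on the aligned well-lit image;
    - matching term: features of the low-light image are pulled toward
      features of the well-lit image, which exists only during training
      (the privileged information) and is not needed at test time.
    """
    pose_term = mse(pred_heatmap_low, gt_heatmap)
    match_term = mse(student_feat_low, teacher_feat_well)
    return pose_term + lam * match_term


if __name__ == "__main__":
    # Toy pair: features differ by 1 per element, prediction is off by 1 in one cell.
    loss = privileged_loss([0.0, 0.0], [1.0, 1.0], [1.0, 0.0], [0.0, 0.0], lam=0.5)
    print(loss)
```

At inference time only the low-light branch would be used, since the well-lit image is unavailable; the matching term exists solely to shape the representation during training.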

Pose estimation results (higher is better). LL-N, LL-H, and LL-E: low-light images at normal, hard, and extreme darkness levels; LL-A: all low-light images; WL: well-lit images.

| Methods | LL-N | LL-H | LL-E | LL-A | WL |
|---|---|---|---|---|---|
| Baseline-low | 32.6 | 25.1 | 13.8 | 24.6 | 1.6 |
| Baseline-well | 23.5 | 7.5 | 1.1 | 11.5 | 68.8 |
| Baseline-all | 33.8 | 25.4 | 14.3 | 25.4 | 57.9 |
| LLFlow + Baseline-all | 35.2 | 20.1 | 8.3 | 22.1 | 65.1 |
| LIME + Baseline-all | 38.3 | 25.6 | 12.5 | 26.6 | 63.0 |
| DANN | 34.9 | 24.9 | 13.3 | 25.4 | 58.6 |
| AdvEnt | 35.6 | 23.5 | 8.8 | 23.8 | 62.4 |
| Ours | 42.3 | 34.0 | 18.6 | 32.7 | 68.5 |

Please feel free to contact us with any feedback, questions, or comments.